Pangram Labs found 6.96% of 857,434 news articles from July 1, 2024, were AI-generated, underscoring the need for better detection to prevent fraud and disinformation.

At NewsCatcher, we understand the importance of maintaining the integrity of news in a time of increased inauthentic content. We were thrilled when Pangram Labs reached out proposing a collaboration to shed light on the prevalence of AI-generated content and its impact on the news industry.

Read about their experiment setup using NewsCatcher and their findings below.

Overview

News is a $150BN industry employing thousands of reporters and journalists to write news articles receiving billions of views. With AI and the rise of large language models, many lower-quality news sites, and some bad actors, have leaned on AI to generate content cheaply, quickly, and at scale. Because AI cannot fill a journalist's role, these news sites are limited to repeating information from the models' training data or stealing and rephrasing other outlets' articles.

Inauthentic content has also been shown to be less desirable to online readers, who visit it less often. In a recent blog post, we cited research conducted by NP Digital, which found that online readers preferred and prioritized human-generated articles. Specifically:

- Readers spent 93% more time on page with human-written content than on purely AI-generated content.

- Readers were 3.6x more likely on average to visit human-written articles than AI-generated ones.

These AI publications exist mainly to siphon traffic and potential ad revenue away from authentic news content, and serve as part of a growing content farming operation that captured 21% of ad impressions and more than $10BN in 2023.

Knowing the threat and potential damages incurred by this rise of inauthentic news, we wanted to quantify the actual scale of this problem. We collaborated with NewsCatcher to classify a given day’s sample of globally published news.

Experiment Setup

We began by compiling a collection of all the news in the world published on July 1, 2024.

NewsCatcher’s API is the most exhaustive source of daily published, global news articles, with over 75,000 sources and serving large enterprise organizations. Their technology allowed us to query the full text of articles published from around the world - written in different languages and covering a broad range of topics.

Using NewsCatcher, we collected all the news published on one day. From this data dump, we analyzed 857,434 articles from 26,675 online publishers, which we treat as a representative sample of the news published daily.

Detection Approach

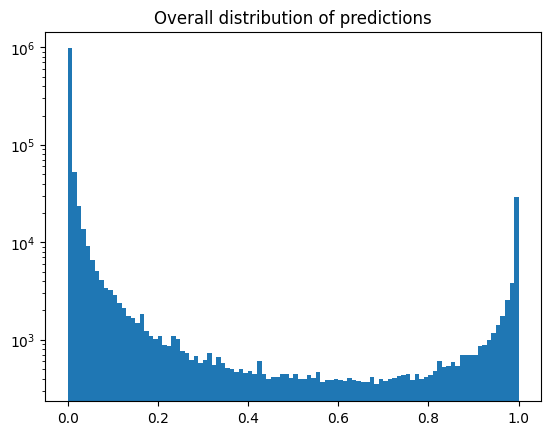

After sourcing the articles, we ran our Pangram Text classifier to determine which articles were AI-generated. Pangram Text is the industry leader in classification accuracy (over 30x more accurate than the next leading commercial solution), with a strong commitment to a low false positive rate. In our technical report, we show that our false positive rate on news is only 0.001%, so when we predict that a news article is AI-generated, we can be confident that it truly is. Our solution typically takes in a document or a piece of text and returns a prediction of the likelihood that it was generated by an LLM. For a web page, we would normally have to post-process and clean the page’s content to isolate just the article text, but with NewsCatcher we were able to pull the cleaned text directly and run inference with our text classifier.

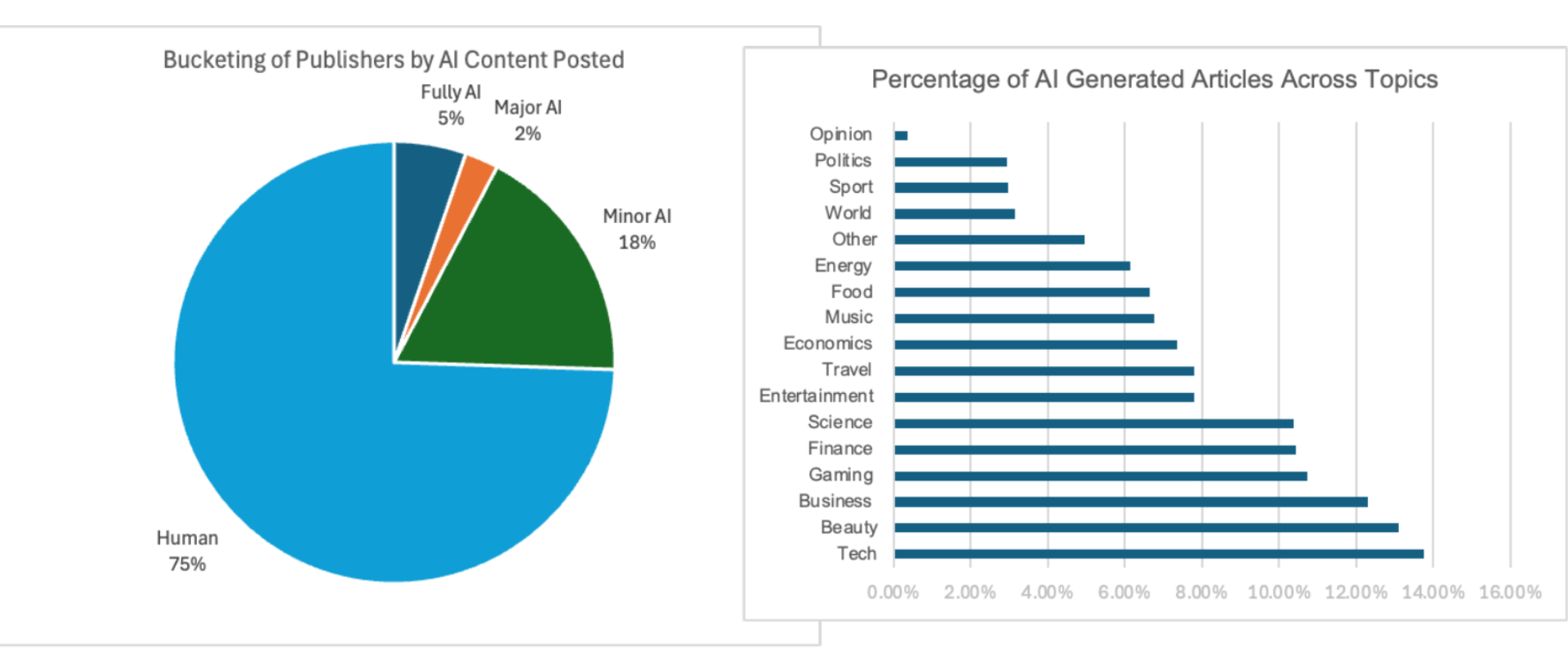

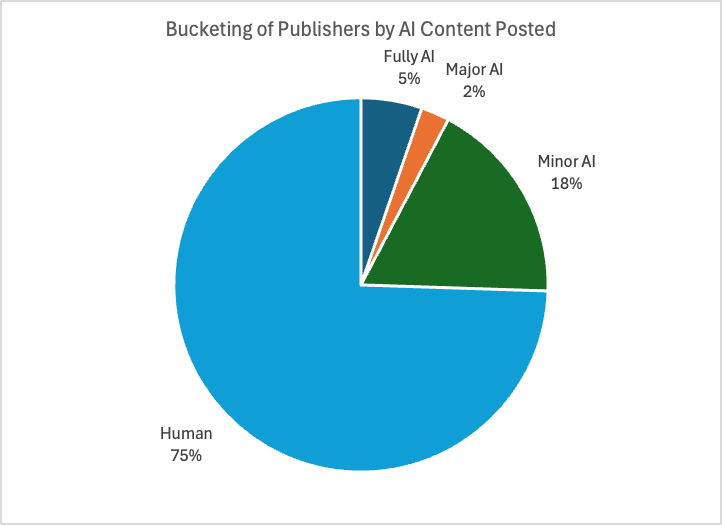

We then aggregated the articles by publisher and bucketed each publisher by the share of its articles labeled as AI. The bucketing framework is as follows:

- If a publisher had fewer than 10% of their articles labeled as AI, that publisher would be considered a human publisher

- If a publisher had between 10% and 50% of their articles labeled as AI, that publisher would be considered a minor AI publisher

- If a publisher had between 50% and 80% of their articles labeled as AI, that publisher would be considered a major AI publisher

- If a publisher had over 80% of their articles labeled as AI, that publisher would be considered a fully AI-generated publisher
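The bucketing rule above can be sketched in a few lines of Python. This is a minimal illustration rather than Pangram's actual code: the function name `bucket_publisher` and the label strings are hypothetical. The text also does not say which bucket a publisher at exactly 10%, 50%, or 80% falls into, so the sketch assumes that 10% and 50% open the higher bucket, while exactly 80% stays in the major bucket (since the fully AI-generated bucket is described as "over 80%").

```python
def bucket_publisher(ai_articles: int, total_articles: int) -> str:
    """Assign a publisher to a bucket based on its share of AI-labeled articles.

    Boundary handling (exactly 10%, 50%, 80%) is an assumption of this sketch;
    the article does not specify it.
    """
    if total_articles <= 0:
        raise ValueError("total_articles must be positive")
    if not 0 <= ai_articles <= total_articles:
        raise ValueError("ai_articles must be between 0 and total_articles")

    # Compare ai_articles / total_articles against each threshold using
    # integer arithmetic, which avoids floating-point edge cases.
    scaled = ai_articles * 100
    if scaled < 10 * total_articles:
        return "human"
    if scaled < 50 * total_articles:
        return "minor AI"
    if scaled <= 80 * total_articles:
        return "major AI"
    return "fully AI-generated"
```

Under these assumptions, a hypothetical publisher with 3 AI-labeled articles out of 40 (7.5%) lands in the human bucket, and one with 9 out of 10 lands in the fully AI-generated bucket.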

Aggregate Statistics

Of the total articles sampled, we found that:

59,653 articles were classified as AI, representing 6.96% of the article set.
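As a quick sanity check, the headline percentage can be reproduced from the two counts. The sketch below also estimates how many false positives the 0.001% rate quoted in the Detection Approach section would imply across the sample, under the simplifying and deliberately pessimistic assumption that every article was human-written. The function and constant names are illustrative only.

```python
TOTAL_ARTICLES = 857_434           # articles analyzed (from the article)
AI_ARTICLES = 59_653               # articles classified as AI (from the article)
FALSE_POSITIVE_RATE = 0.001 / 100  # 0.001% expressed as a fraction


def ai_share(ai_articles: int, total_articles: int) -> float:
    """Fraction of the sample classified as AI-generated."""
    return ai_articles / total_articles


def expected_false_positives(human_articles: int, fpr: float) -> float:
    """Expected number of human-written articles wrongly flagged as AI."""
    return human_articles * fpr


share = ai_share(AI_ARTICLES, TOTAL_ARTICLES)
# Upper bound: pretend all articles are human-written.
worst_case_fp = expected_false_positives(TOTAL_ARTICLES, FALSE_POSITIVE_RATE)

print(f"AI share: {share:.2%}")                                   # AI share: 6.96%
print(f"Expected false positives (worst case): {worst_case_fp:.1f}")  # about 8.6
```

Roughly nine expected false positives against 59,653 flagged articles suggests that misclassification contributes very little to the 6.96% figure, provided the reported false positive rate holds for this sample.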

The breakdown of online publishers

We then looked at the AI classifications across key features, including the language the article was written in, the country where it was published, and the topic it covered, as well as any special political relevance.

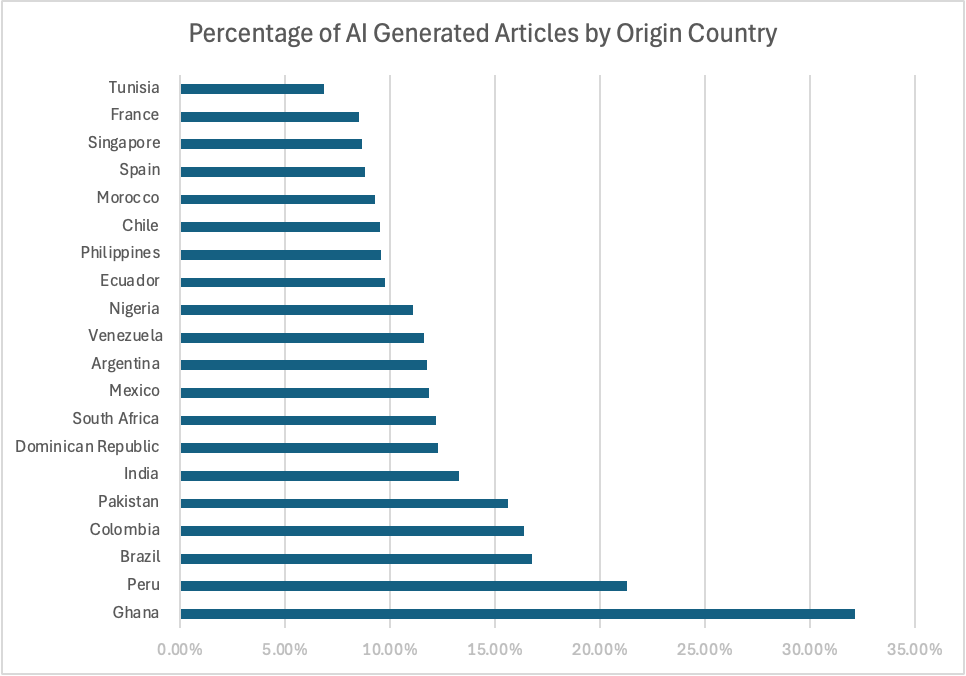

Countries with the highest frequency of AI articles (minimum 100 articles)

We notice in general that Ghana is a rather strong outlier in terms of AI-generated content. While the overall frequency is lower, India is also a major publisher of AI-generated content, which should not be surprising given the impact of deepfakes on the recent Indian election.
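A per-country ranking with a minimum-article threshold, like the one described above, could be computed along these lines. This is a sketch under stated assumptions: the input format (pairs of a country code and a boolean AI label) and the function name `ai_frequency_by_country` are hypothetical, and the example data is synthetic rather than drawn from the study.

```python
from collections import defaultdict


def ai_frequency_by_country(articles, min_articles=100):
    """Rank countries by the share of their articles labeled as AI.

    `articles` is an iterable of (country, is_ai) pairs. Countries with fewer
    than `min_articles` articles are dropped so that tiny samples do not
    dominate the ranking.
    """
    totals = defaultdict(int)
    ai_counts = defaultdict(int)
    for country, is_ai in articles:
        totals[country] += 1
        if is_ai:
            ai_counts[country] += 1

    rates = {
        country: ai_counts[country] / count
        for country, count in totals.items()
        if count >= min_articles
    }
    # Highest AI frequency first.
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Synthetic example with placeholder country codes (not real results).
sample = (
    [("AA", True)] * 50 + [("AA", False)] * 150    # 200 articles, 25% AI
    + [("BB", True)] * 10 + [("BB", False)] * 90   # 100 articles, 10% AI
    + [("CC", True)] * 99                          # 99 articles, below threshold
)
ranking = ai_frequency_by_country(sample)
```

In this synthetic data, the placeholder country "CC" is excluded despite every one of its articles being labeled AI, because it falls below the 100-article minimum.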

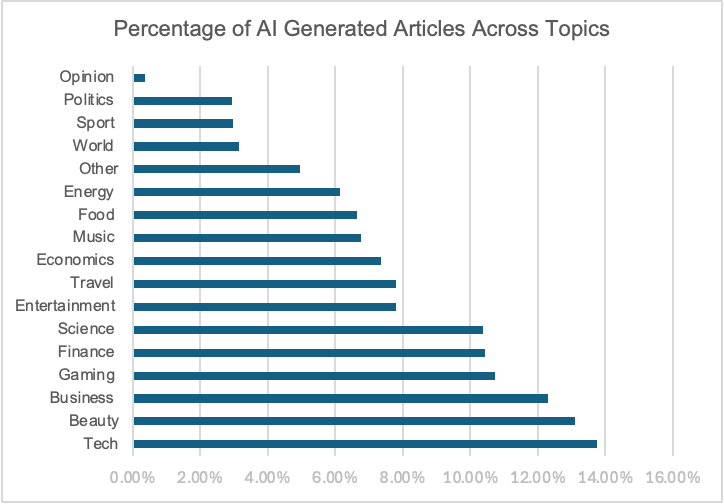

AI Frequency by Topic

We notice that beauty (sponsored articles), tech, and business (crypto scams) are topics with an especially high share of AI articles. Somewhat surprisingly, politics tends to be lower than average when it comes to AI articles: we think this is because advertisers tend to avoid political news sites due to brand safety risks, lowering the incentive for publishers to produce made-for-advertising political content.

What does AI “news” look like?

We identify several categories of AI news articles: made-for-advertising sites (MFAs), sponsored articles, fraud, and disinformation.

Made for Advertising

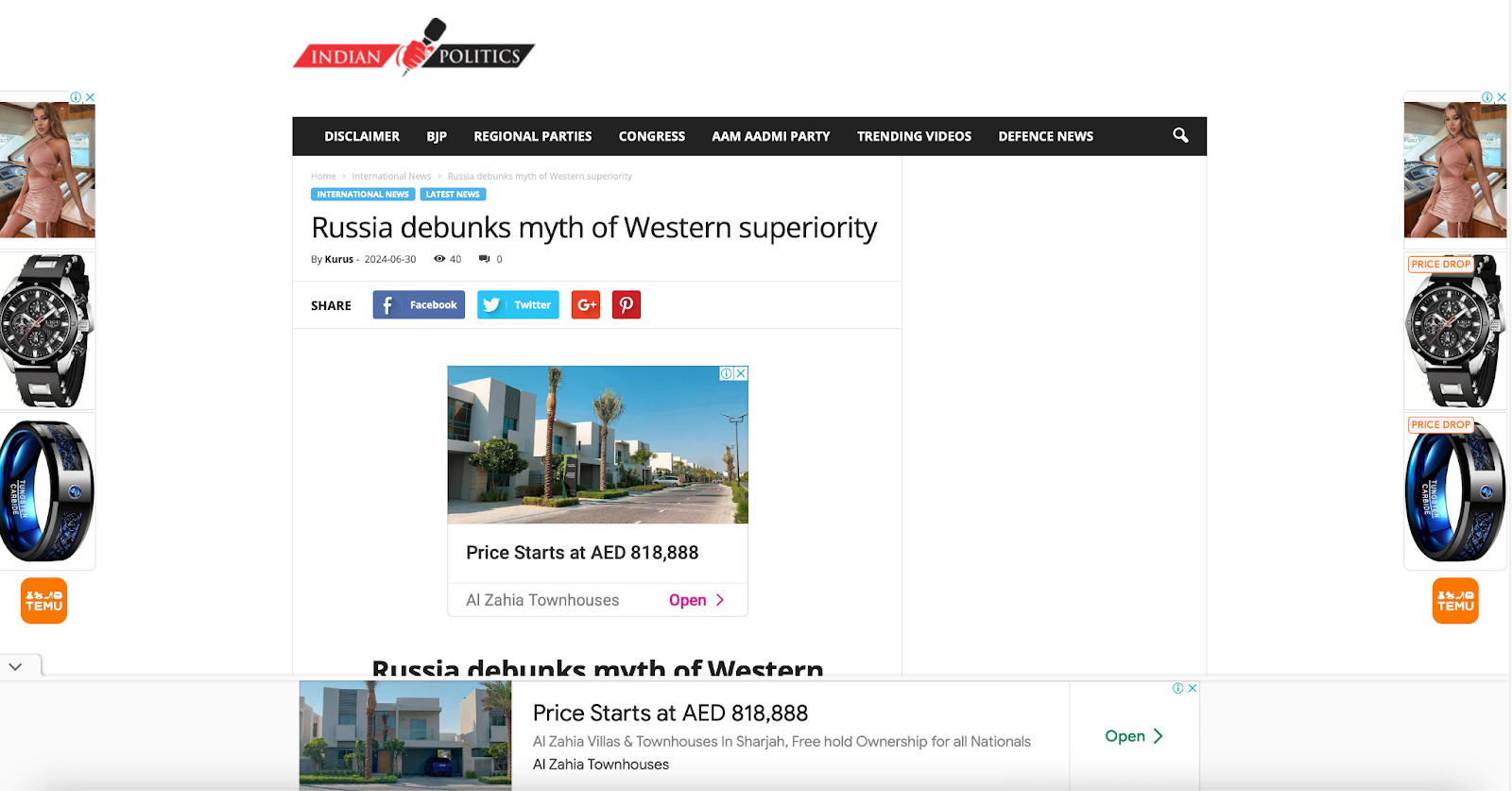

A site whose only purpose is to serve ads rather than deliver legitimate content is called an “MFA”, a made-for-advertising site. Here’s an example of an MFA:

As we can see, above the fold of the website there is no actual content other than the title, and there are 8 display ads clamoring for the user’s attention. The AI content below is not really meant to be read: it is just there to attract visitors to the site and soak up ad revenue before they bounce, which they typically do immediately. Advertisers are often not even aware that they are advertising on these sites: the programmatic nature of digital advertising means that this ad real estate is bought and sold in a matter of milliseconds by automated bidding algorithms. Companies like Jounce Media, part of a group of companies called “Supply Chain Optimizers”, help advertisers avoid wasting their budget on sites like this.

Jounce defines three key characteristics of an MFA:

- Paid Traffic: sites that have little to no organic audience and are reliant on visits from clickbait ads from other sites.

- Aggressive Monetization: Through high ad load and rapidly auto-refreshing placements, these publishers capture an arbitrage opportunity through the bidding markets but at the cost of a hostile user experience.

- Superficial KPIs: These sites score highly on vanity metrics like viewability and video completion rates, but Jounce’s research shows that ads on MFAs do not actually affect buyers’ purchasing decisions.

To summarize, MFAs steal ad traffic from sites with legitimate content in order to offer a cheap supply of advertising space. They deliver vanity metrics to programmatic ad campaigns, while not actually providing any useful content or any actual ROI for advertisers. They litter the internet and make for a hostile user experience for the average internet consumer.

While there is no concrete metric that defines an MFA, we estimate that MFAs make up about 50% of the AI-generated content online.

Paid-For/Sponsored Content

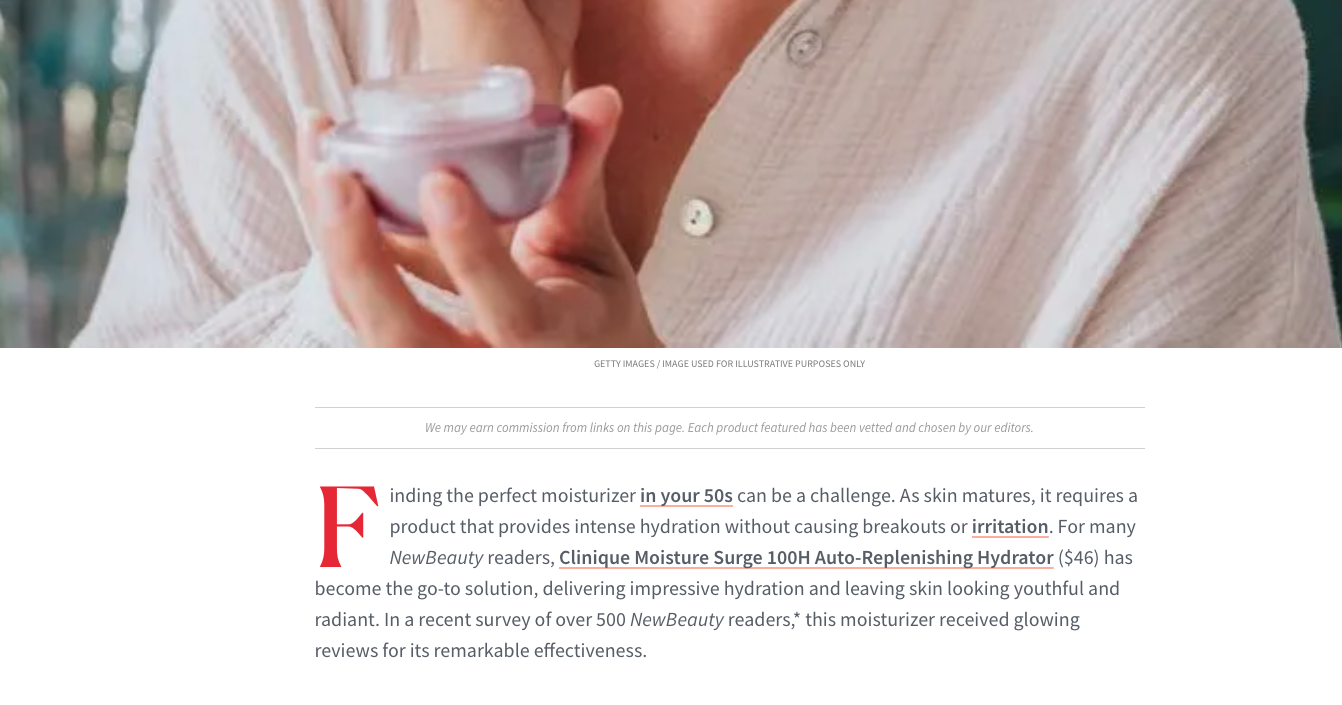

Some news on the Internet can be bought as a means to advertise a product, while masquerading as actual content that was written by an influencer or legitimate review publication. We noticed that beauty was one of the topics with the highest frequency of AI-generated content. When we dug into the data, we found that many of the “news” articles under the beauty topic are simply sponsored articles like this one:

Many copywriters are resorting to AI to write these low-quality sponsored articles, because the goal is simply to sell the placement rather than to produce an authentic review.

Scams

We notice a lot of run-of-the-mill scam campaigns generated with AI as well. In particular, crypto scams seem to be very commonplace, and are even promoted on reputable sites such as Medium.

Disinformation

While we find that the use of AI is typically less prevalent in political news (in large part because many advertisers avoid political news due to brand safety risk), AI is a growing component of disinformation campaigns. NewsGuard maintains an AI tracking center with detailed, up-to-date tracking of AI-enabled disinformation.

Unlike the other forms of deception that we see bad actors using AI for, the point of these articles is actually to get people to read the content. Typically, the purpose of these campaigns is to change public sentiment or opinion on a particular topic.

As the US election approaches in November, we can only expect this kind of AI abuse to continue.

Summary

- About 7% of the world’s daily news as of July 2024 is likely generated by AI.

- West Africa and South Asia are outliers when it comes to the amount of AI content published.

- Beauty, tech, and business have the highest proportion of AI content, while politics and opinion have the lowest.

- AI content is usually associated with some kind of malicious intent or deceptive behavior. MFAs attempt to deceive advertisers into believing that low-quality ad space is actually premium. Sponsored content is not necessarily deceptive, but it is also not genuinely authentic and should not be mistaken for a real consumer review. Scams and disinformation genuinely threaten Internet users, and the potential harm these sites cause is obvious.

Want to learn more about our map of AI content across the web, or our AI blocklist for advertisers? Get in touch at info@pangramlabs.com!